The Interpretation of Research Results

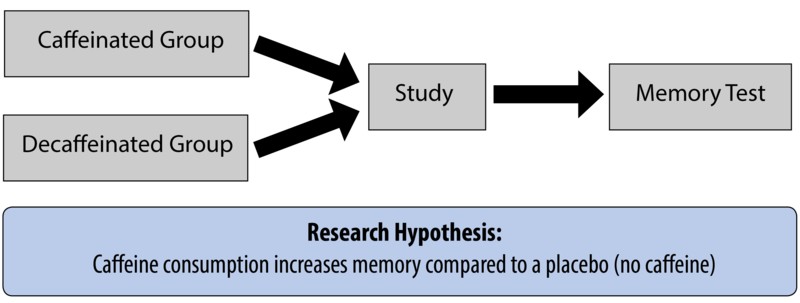

Imagine a researcher wanting to examine the hypothesis—a specific prediction based on previous research or scientific theory—that caffeine enhances memory. She knows there are several published studies that suggest this might be the case, and she wants to further explore the possibility. She designs an experiment to test this hypothesis. She randomly assigns some participants a cup of fully caffeinated tea and some a cup of herbal tea. All the participants are instructed to drink up, study a list of words, then complete a memory test. There are three possible outcomes of this proposed study:

1. The caffeine group performs better (support for the hypothesis).

2. The no-caffeine group performs better (evidence against the hypothesis).

3. There is no difference in the performance between the two groups (also evidence against the hypothesis).

Let’s look, from a scientific point of view, at how the researcher should interpret each of these three possibilities.

First, if the results of the memory test reveal that the caffeine group performs better, this is a piece of evidence in favor of the hypothesis: It appears, at least in this case, that caffeine is associated with better memory. It does not, however, prove that caffeine is associated with better memory. There are still many questions left unanswered. How long does the memory boost last? Does caffeine work the same way with people of all ages? Is there a difference in memory performance between people who drink caffeine regularly and those who never drink it? Could the results be a freak occurrence? Because of these uncertainties, we do not say that a study, especially a single study, proves a hypothesis. Instead, we say the results of the study offer evidence in support of the hypothesis. Even if we tested this across 10,000 or 100,000 people, we still could not use the word “proven” to describe this phenomenon.

This is because inductive reasoning is based on probabilities. Probabilities are always a matter of degree: an outcome may be extremely likely, extremely unlikely, or anywhere in between. Science is better at shedding light on the likelihood, or probability, of something than at proving it. In this way, data is still highly useful even if it doesn’t fit Popper’s absolute standards.

The science of meteorology helps illustrate this point. You might look at your local weather forecast and see a high likelihood of rain. This is because the meteorologist has used inductive reasoning to create her forecast. She has taken current observations—lots of dense clouds coming toward your city—and compared them to historical weather patterns associated with rain, making a reasonable prediction of a high probability of rain. The meteorologist has not proven it will rain, however, by pointing out the oncoming clouds.
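The same probabilistic logic applies to the question of whether a result could be a "freak occurrence." One common way to put a number on that question is a permutation test: shuffle the group labels many times and count how often chance alone produces a difference at least as large as the one observed. The sketch below is only an illustration. The scores are hypothetical (they are not data from any real study), and the function name `permutation_p_value` and its parameters are assumptions made for this example.

```python
import random


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Return the observed mean difference (a minus b) and the share of
    label shuffles that produce a difference at least that large."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)

    pooled = list(group_a) + list(group_b)
    n_b = len(pooled) - n_a
    at_least_as_large = 0
    for _ in range(n_shuffles):
        # Shuffling mimics a world in which the drink makes no difference.
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b
        if diff >= observed:
            at_least_as_large += 1
    return observed, at_least_as_large / n_shuffles


# Hypothetical scores (words recalled out of 20), invented for illustration.
caffeine = [14, 12, 15, 11, 13, 16, 12, 14]
herbal = [12, 11, 13, 10, 12, 14, 11, 12]

observed_diff, p_value = permutation_p_value(caffeine, herbal)
print(f"observed difference: {observed_diff:.2f} words")
print(f"share of shuffles with a difference at least this large: {p_value:.3f}")
```

A small share suggests that chance alone rarely produces such a gap, but the share is never exactly zero. That is why the result counts as evidence for the hypothesis rather than proof of it.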

Proof is more associated with deductive reasoning. Deductive reasoning starts with general principles that are applied to specific instances (the reverse of inductive reasoning). When the general principles, or premises, are true, and the structure of the argument is valid, the conclusion is, by definition, proven; it must be so. A deductive truth must apply in all relevant circumstances. For example, all living cells contain DNA. From this, you can reason deductively that any specific living cell (of an elephant, or a person, or a snake) will therefore contain DNA. Given the complexity of psychological phenomena, which involve many contributing factors, it is nearly impossible to make these types of broad statements with certainty.

——————————————————————————————————————————————————

Test Yourself 2: Inductive or Deductive?

1. The stove was on and the water in the pot was boiling over. The front door was standing open. These clues suggest the homeowner left unexpectedly and in a hurry.

2. Gravity is associated with mass. Because the moon has a smaller mass than the Earth, it should have weaker gravity.

3. Students don’t like to pay for high-priced textbooks. It is likely that many students in the class will opt not to purchase a book.

4. To earn a college degree, students need 100 credits. Janine has 85 credits, so she cannot graduate.

[See answer at end of this module]

——————————————————————————————————————————————————

The second possible result from the caffeine-memory study is that the group who had no caffeine demonstrates better memory. This result is the opposite of what her hypothesis predicts. Here, the researcher must admit the evidence does not support her hypothesis. She must be careful, however, not to extend that interpretation to other claims. For example, finding better memory in the no-caffeine group would not be evidence that caffeine harms memory. Again, there are too many unknowns. Is this finding a freak occurrence, perhaps based on an unusual sample? Is there a problem with the design of the study? The researcher doesn’t know. She simply knows that she was not able to observe support for her hypothesis.

There is at least one additional consideration: The researcher originally developed her caffeine-benefits-memory hypothesis based on conclusions drawn from previous research. That is, previous studies found results that suggested caffeine boosts memory. The researcher’s single study should not outweigh the conclusions of many studies. Perhaps the earlier research employed participants of different ages or who had different baseline levels of caffeine intake. This new study simply becomes a piece of fabric in the overall quilt of studies of the caffeine-memory relationship. It does not, on its own, definitively falsify the hypothesis.

Finally, it’s possible that the results show no difference in memory between the two groups. How should the researcher interpret this? How would you? In this case, the researcher once again has to admit that she has not found support for her hypothesis.

Interpreting the results of a study, regardless of outcome, rests on the quality of the observations from which those results are drawn. If you learn, say, that each group in a study included only four participants, or that they were all over 90 years old, you might have concerns. Specifically, you should be concerned that the observations, even if accurate, aren’t representative of the general population. This is one of the defining differences between conclusions drawn from personal anecdotes and those drawn from scientific observations. Anecdotal evidence, derived from personal experience and unsystematic observations (e.g., “common sense”), is limited by the quality and representativeness of observations, and by memory shortcomings. Well-designed research, on the other hand, relies on observations that are systematically recorded, of high quality, and representative of the population it claims to describe.
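The concern about very small groups can be illustrated with a short simulation. The sketch below draws many random samples of different sizes from a made-up population of memory scores and measures how much the sample averages bounce around. Everything here is an assumption for illustration: the population (mean 12, standard deviation 3), the sample sizes, and the function name `spread_of_sample_means`. It addresses only sample size, not other threats to representativeness such as testing only people over 90.

```python
import random
import statistics


def spread_of_sample_means(population, sample_size, n_samples=2_000, seed=1):
    """Draw many random samples and return the standard deviation of
    their means, i.e. how much a sample average varies by chance."""
    rng = random.Random(seed)
    means = [
        statistics.mean(rng.sample(population, sample_size))
        for _ in range(n_samples)
    ]
    return statistics.stdev(means)


# Hypothetical population of memory scores, invented for illustration.
population_rng = random.Random(42)
population = [population_rng.gauss(12, 3) for _ in range(10_000)]

for size in (4, 40, 400):
    spread = spread_of_sample_means(population, size)
    print(f"groups of {size:>3}: sample means vary by about {spread:.2f} points")
```

Under these assumptions, averages from groups of four swing far more from sample to sample than averages from larger groups, so a difference seen between two four-person groups is much easier to produce by chance.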